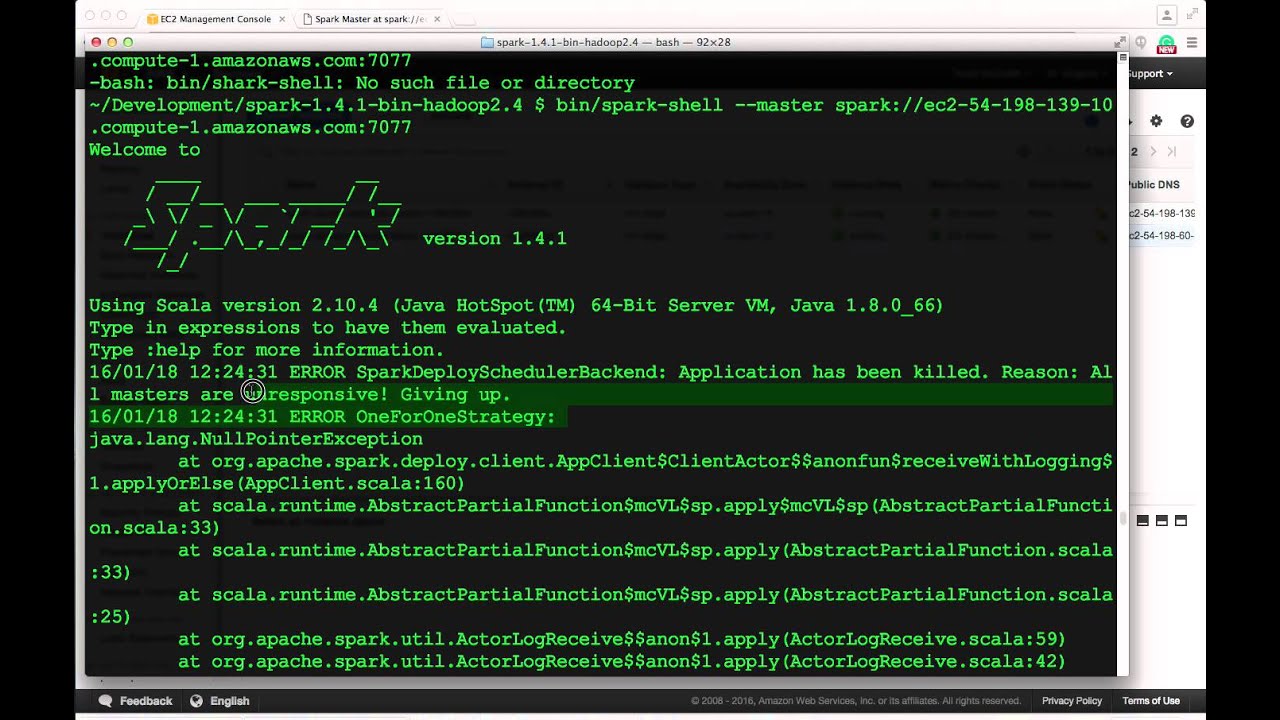

From Apache Spark’s website, download the tgz file. If you want to choose version 2.4.0, be careful: some software (like Apache Zeppelin) did not support this version yet as of the end of 2018. Run the download command on each node, and make sure to repeat this step for every node. Then, on each node, extract the software and remove the .tar.gz file.

Once all slave nodes are running, reload your master browser page. All Worker nodes will be attached to Master 1.

What if you shut down Master 1? Zookeeper will handle the selection of a new Master! When you stop Master 1, Master 2 will be elected as the new Master and all Worker nodes will be attached to the newly elected master. If you want to visualize what’s going on, you’ll see that Zookeeper elected Master 2 as the primary master. From the Spark UI of Master 2, you’ll see that all slave nodes are now attached.

**Conclusion**: We covered the basics of setting up Apache Spark on an AWS EC2 instance. We ran both the Master and Slave daemons on the same node. Finally, we demonstrated the resilience of our Masters thanks to Zookeeper.
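To make the Zookeeper-backed failover more concrete, here is a minimal sketch of the settings involved. It relies on the `spark.deploy.recoveryMode`, `spark.deploy.zookeeper.url` and `spark.deploy.zookeeper.dir` properties of Spark standalone mode, which are normally passed through `SPARK_DAEMON_JAVA_OPTS` in `conf/spark-env.sh`. The host names (`zookeeper-node`, `master-1`, `master-2`), the Zookeeper port 2181 and the `/spark` directory are assumptions for illustration, not values taken from this tutorial.

```python
# Sketch only: host names, the ZooKeeper port and the znode directory
# are hypothetical placeholders, not values from the tutorial.

def zookeeper_recovery_opts(zk_hosts, zk_port=2181, zk_dir="/spark"):
    """Build a value for SPARK_DAEMON_JAVA_OPTS that enables ZooKeeper recovery."""
    zk_url = ",".join(f"{host}:{zk_port}" for host in zk_hosts)
    return (
        "-Dspark.deploy.recoveryMode=ZOOKEEPER "
        f"-Dspark.deploy.zookeeper.url={zk_url} "
        f"-Dspark.deploy.zookeeper.dir={zk_dir}"
    )


def ha_master_url(master_hosts, port=7077):
    """Build a master URL that lists every master, so workers can fail over."""
    return "spark://" + ",".join(f"{host}:{port}" for host in master_hosts)


if __name__ == "__main__":
    print(zookeeper_recovery_opts(["zookeeper-node"]))
    print(ha_master_url(["master-1", "master-2"]))
```

Passing the comma-separated URL to each worker lets it register with whichever master Zookeeper currently elects, which is what makes the switch from Master 1 to Master 2 transparent.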

INSTALL APACHE SPARK ON CLOUD9 HOW TO

Standalone mode is good to go for developing applications in Spark. Java should be pre-installed on the machines on which we have to run Spark jobs.

Connect via SSH on every node except the node named Zookeeper, and make sure an SSH connection is established. If you don’t remember how to do that, you can check the last section of […].

For the sake of stability, I chose to install version 2.3.2.
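As a rough illustration of these prerequisites, the sketch below checks that a `java` executable is on the `PATH` and builds the archive name for version 2.3.2. The `hadoop2.7` build profile and the `archive.apache.org` URL layout are assumptions on my part; the tutorial only says the file comes from Apache Spark’s website.

```python
import shutil

SPARK_VERSION = "2.3.2"        # version chosen in the text
HADOOP_PROFILE = "hadoop2.7"   # assumption: the pre-built Hadoop 2.7 package


def java_available():
    """Return True if a `java` executable can be found on the PATH."""
    return shutil.which("java") is not None


def spark_archive_name(version=SPARK_VERSION, hadoop=HADOOP_PROFILE):
    """File name of the pre-built Spark archive for the given version."""
    return f"spark-{version}-bin-{hadoop}.tgz"


def spark_download_url(version=SPARK_VERSION, hadoop=HADOOP_PROFILE):
    """Assumed location of the archive on the Apache archive server."""
    name = spark_archive_name(version, hadoop)
    return f"https://archive.apache.org/dist/spark/spark-{version}/{name}"


if __name__ == "__main__":
    print("Java found:", java_available())
    print("Archive URL:", spark_download_url())
```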

INSTALL APACHE SPARK ON CLOUD9 DRIVER

Spark can be configured with multiple cluster managers like YARN, Mesos, etc. Along with that, it can be configured in standalone mode. For this tutorial, I chose to deploy Spark in Standalone Mode. This is the simplest way to deploy Spark on a private cluster. Both driver and worker nodes run on the same machine.
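The choice of cluster manager shows up in the master URL a Spark application is given. The helper below is a small sketch that maps the common URL forms (`local[...]`, `spark://...`, `yarn`, `mesos://...`) to the manager they select; the function name `cluster_manager` is hypothetical and only meant to illustrate the distinction.

```python
def cluster_manager(master_url):
    """Name the cluster manager selected by a Spark master URL."""
    if master_url == "local" or master_url.startswith("local["):
        return "local"        # single JVM, no cluster manager
    if master_url.startswith("spark://"):
        return "standalone"   # Spark's built-in manager, used in this tutorial
    if master_url == "yarn":
        return "yarn"
    if master_url.startswith("mesos://"):
        return "mesos"
    raise ValueError(f"unrecognized master URL: {master_url!r}")


if __name__ == "__main__":
    for url in ("local[*]", "spark://localhost:7077", "yarn", "mesos://host:5050"):
        print(url, "->", cluster_manager(url))
```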

INSTALL APACHE SPARK ON CLOUD9 FULL

Start the services with `start-master.sh` and `start-workers.sh spark://localhost:7077`. Once the services are started, go to the browser and enter the URL of the Spark page. From the page, you can see that my master and slave services are started.

The goal of this final tutorial is to configure Apache-Spark on your instances and make them communicate with your Apache-Cassandra Cluster with full resilience.
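Before opening the browser, it can help to confirm that the master is actually listening. The sketch below does a plain TCP connection test. It assumes the master runs on `localhost` with Spark’s default ports (7077 for the master, 8080 for its web UI); adjust these if your configuration differs.

```python
import socket


def is_listening(host, port, timeout=1.0):
    """Return True if a TCP connection to host:port succeeds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


if __name__ == "__main__":
    # Assumed defaults: 7077 for the master, 8080 for the master web UI.
    for name, port in (("master", 7077), ("master web UI", 8080)):
        state = "up" if is_listening("localhost", port) else "down"
        print(f"{name} on port {port}: {state}")
```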

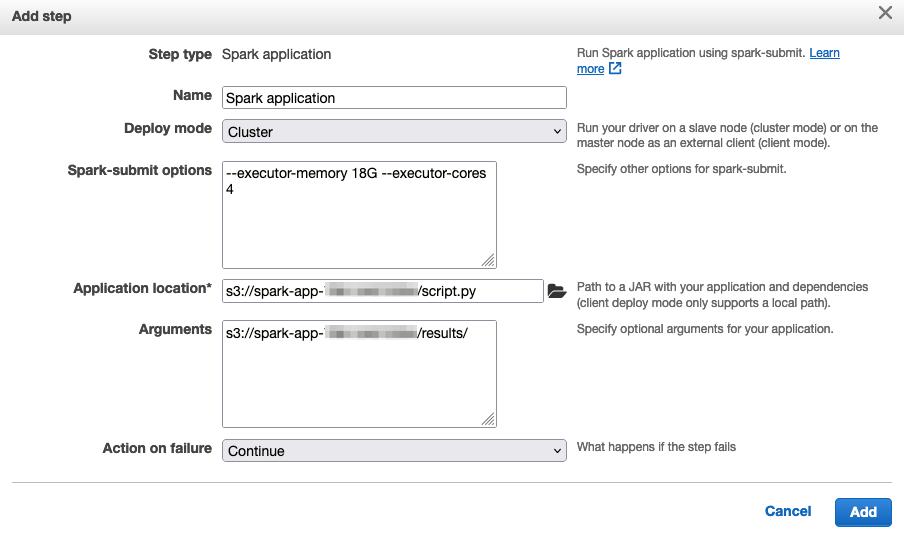

This tutorial shows you how to run example code that uses the Cloud Storage connector with Apache Spark. It is divided into 5 sections. You will write a simple wordcount Spark job in Java, Scala, or Python, then run the job on a Dataproc cluster.
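For readers new to the wordcount example, the sketch below shows the logic in plain Python, without Spark: split each line into words, then sum the occurrences of each word. A Spark job typically expresses the same steps with `flatMap`, `map` and `reduceByKey` on an RDD. The tokenizing rule used here (lowercase, with runs of letters, digits and apostrophes treated as words) is an assumption, not something the tutorial specifies.

```python
import re
from collections import Counter


def word_count(lines):
    """Count word occurrences across an iterable of text lines."""
    counts = Counter()
    for line in lines:
        # "flatMap" step: one line becomes many lowercase words.
        words = re.findall(r"[a-z0-9']+", line.lower())
        # "reduceByKey" step: add each word's occurrences to its total.
        counts.update(words)
    return dict(counts)


if __name__ == "__main__":
    sample = ["to be or", "not to be"]
    for word, n in sorted(word_count(sample).items()):
        print(word, n)
```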

This topic will help you install Apache-Spark on your AWS EC2 cluster. We’ll go through a standard configuration which allows the elected Master to spread its jobs on Worker nodes. The “election” of the primary master is handled by Zookeeper. We will also add dependencies to connect Spark and Cassandra.
